RadiusNeighborsClassifier

- class skfda.ml.classification.RadiusNeighborsClassifier(*, radius: float = 1.0, weights: Literal['uniform', 'distance'] | Callable[[ndarray[Any, dtype[float64]]], ndarray[Any, dtype[float64]]] = 'uniform', algorithm: Literal['auto', 'ball_tree', 'kd_tree', 'brute'] = 'auto', leaf_size: int = 30, metric: Literal['precomputed'], outlier_label: int | str | Sequence[int] | Sequence[str] | None = None, n_jobs: int | None = None)

- class skfda.ml.classification.RadiusNeighborsClassifier(*, radius: float = 1.0, weights: Literal['uniform', 'distance'] | Callable[[ndarray[Any, dtype[float64]]], ndarray[Any, dtype[float64]]] = 'uniform', algorithm: Literal['auto', 'ball_tree', 'kd_tree', 'brute'] = 'auto', leaf_size: int = 30, outlier_label: int | str | Sequence[int] | Sequence[str] | None = None, n_jobs: int | None = None)

- class skfda.ml.classification.RadiusNeighborsClassifier(*, radius: float = 1.0, weights: Literal['uniform', 'distance'] | Callable[[ndarray[Any, dtype[float64]]], ndarray[Any, dtype[float64]]] = 'uniform', algorithm: Literal['auto', 'ball_tree', 'kd_tree', 'brute'] = 'auto', leaf_size: int = 30, metric: Metric[Input] = l2_distance, outlier_label: int | str | Sequence[int] | Sequence[str] | None = None, n_jobs: int | None = None)

Classifier implementing a vote among neighbors within a given radius.

- Parameters:

radius (float) – Range of parameter space to use by default for radius_neighbors() queries.

weights (WeightsType) – Weight function used in prediction. Possible values:

- 'uniform': uniform weights. All points in each neighborhood are weighted equally.

- 'distance': weight points by the inverse of their distance. In this case, closer neighbors of a query point have a greater influence than neighbors that are farther away.

- [callable]: a user-defined function that accepts an array of distances and returns an array of the same shape containing the weights.

algorithm (AlgorithmType) – Algorithm used to compute the nearest neighbors:

- 'ball_tree' will use sklearn.neighbors.BallTree.

- 'brute' will use a brute-force search.

- 'auto' will attempt to decide the most appropriate algorithm based on the values passed to the fit() method.

leaf_size (int) – Leaf size passed to BallTree or KDTree. This can affect the speed of the construction and query, as well as the memory required to store the tree. The optimal value depends on the nature of the problem.

metric (Literal['precomputed'] | Metric[Input]) – The distance metric to use for the tree. The default metric is the L2 distance. See the documentation of the metrics module for a list of available metrics.

outlier_label (OutlierLabelType) – Label given to outlier samples (samples with no neighbors within the given radius). If set to None, a ValueError is raised when an outlier is detected.

n_jobs (int | None) – The number of parallel jobs to run for neighbors search. None means 1 unless in a joblib.parallel_backend context. -1 means using all processors.
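The interaction between radius, weights and outlier_label can be illustrated with a small pure-Python sketch. It is not the skfda or sklearn implementation: radius_vote is a hypothetical helper, and its handling of zero distances and of ties is an assumption made only for this illustration.

```python
from collections import defaultdict


def radius_vote(distances, labels, radius=1.0, weights="uniform",
                outlier_label=None):
    """Vote among training labels whose distance to the query is <= radius.

    Hypothetical helper for illustration; not part of skfda.
    """
    # Keep only the neighbors inside the ball (boundary points included).
    inside = [(d, lab) for d, lab in zip(distances, labels) if d <= radius]
    if not inside:
        # No neighbors: the query is an outlier.
        if outlier_label is None:
            raise ValueError("No neighbors found within the given radius.")
        return outlier_label

    dists = [d for d, _ in inside]
    if weights == "uniform":
        w = [1.0] * len(dists)
    elif weights == "distance":
        # Assumption of this sketch: exact matches take the whole vote,
        # which avoids dividing by zero.
        if any(d == 0 for d in dists):
            w = [1.0 if d == 0 else 0.0 for d in dists]
        else:
            w = [1.0 / d for d in dists]
    else:
        # User-defined callable: distances in, weights out.
        w = list(weights(dists))

    totals = defaultdict(float)
    for (_, lab), wi in zip(inside, w):
        totals[lab] += wi
    # Highest total weight wins; ties go to the smallest label.
    return min(totals, key=lambda lab: (-totals[lab], lab))


distances = [0.1, 0.25, 0.28, 0.9]  # query-to-training distances
labels = [1, 0, 0, 1]               # training labels

print(radius_vote(distances, labels, radius=0.3))                      # 0
print(radius_vote(distances, labels, radius=0.3, weights="distance"))  # 1
print(radius_vote(distances, labels, radius=0.05, outlier_label=-1))   # -1
```

With uniform weights the two class-0 neighbors outvote the single class-1 neighbor; with inverse-distance weights the very close class-1 neighbor dominates instead.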

Examples

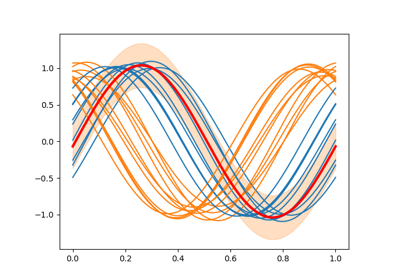

Firstly, we will create a toy dataset with 2 classes.

    >>> from skfda.datasets import make_sinusoidal_process
    >>> fd1 = make_sinusoidal_process(phase_std=.25, random_state=0)
    >>> fd2 = make_sinusoidal_process(phase_mean=1.8, error_std=0.,
    ...                               phase_std=.25, random_state=0)
    >>> fd = fd1.concatenate(fd2)
    >>> y = 15*[0] + 15*[1]

We will fit a Radius Nearest Neighbors classifier.

    >>> from skfda.ml.classification import RadiusNeighborsClassifier
    >>> neigh = RadiusNeighborsClassifier(radius=.3)
    >>> neigh.fit(fd, y)
    RadiusNeighborsClassifier(...radius=0.3...)

We can predict the class of new samples.

    >>> neigh.predict(fd[::2]) # Predict labels for even samples
    array([0, 0, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 1])

See also

KNeighborsClassifier

NearestCentroid

KNeighborsRegressor

RadiusNeighborsRegressor

NearestNeighbors

Notes

See Nearest Neighbors in the sklearn online documentation for a discussion of the choice of algorithm and leaf_size.

This class wraps the sklearn classifier sklearn.neighbors.RadiusNeighborsClassifier.

https://en.wikipedia.org/wiki/K-nearest_neighbor_algorithm

Methods

fit(X, y) – Fit the model using X as training data and y as responses.

get_metadata_routing() – Get metadata routing of this object.

get_params([deep]) – Get parameters for this estimator.

predict(X) – Predict the class labels for the provided data.

predict_proba(X) – Calculate probability estimates for the test data X.

radius_neighbors([X, radius, return_distance]) – Find the neighbors within a given radius of a fdatagrid.

radius_neighbors_graph([X, radius, mode]) – Compute the (weighted) graph of Neighbors for points in X.

score(X, y[, sample_weight]) – Return the mean accuracy on the given test data and labels.

set_params(**params) – Set the parameters of this estimator.

set_score_request(*[, sample_weight]) – Request metadata passed to the score method.

- fit(X, y)

Fit the model using X as training data and y as responses.

- Parameters:

X (Input) – Training data. FDataGrid with the training data or array matrix with shape [n_samples, n_samples] if metric=’precomputed’.

y (TargetClassification) – Training targets. FData with the training responses (functional response case) or array with length n_samples in the multivariate response case.


- Returns:

Self.

- Return type:

SelfTypeClassifier

Note

This method adds the attribute classes_ to the classifier.
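When metric='precomputed', the shapes described above are the important part: a square [n_samples, n_samples] matrix for fit and an (n_query, n_indexed) matrix for predict. The following sketch builds both from curves sampled on a common grid, using a trapezoid-rule approximation of the L2 distance. The helpers l2_distance_grid and pairwise are hypothetical names for this illustration, not skfda functions, and in practice the matrices would presumably be passed as NumPy arrays.

```python
import math


def l2_distance_grid(f, g, grid):
    """Approximate the L2 distance between two sampled curves (trapezoid rule)."""
    sq = [(a - b) ** 2 for a, b in zip(f, g)]
    integral = sum(
        0.5 * (sq[i] + sq[i + 1]) * (grid[i + 1] - grid[i])
        for i in range(len(grid) - 1)
    )
    return math.sqrt(integral)


def pairwise(rows, cols, grid):
    """Distance matrix: one row per curve in `rows`, one column per curve in `cols`."""
    return [[l2_distance_grid(r, c, grid) for c in cols] for r in rows]


grid = [0.0, 0.5, 1.0]
train = [[0.0, 0.0, 0.0], [1.0, 1.0, 1.0], [0.0, 1.0, 0.0]]
query = [[0.5, 0.5, 0.5]]

# Square matrix between training curves: the shape expected by fit.
D_train = pairwise(train, train, grid)
# Query-versus-training matrix: the shape expected by predict.
D_query = pairwise(query, train, grid)

print(len(D_train), len(D_train[0]))  # 3 3
print(len(D_query), len(D_query[0]))  # 1 3
```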

- get_metadata_routing()

Get metadata routing of this object.

Please check User Guide on how the routing mechanism works.

- Returns:

routing – A MetadataRequest encapsulating routing information.

- Return type:

MetadataRequest

- get_params(deep=True)

Get parameters for this estimator.

- predict(X)

Predict the class labels for the provided data.

- Parameters:

X (Input) – Test samples or array (n_query, n_indexed) if metric == ‘precomputed’.

- Returns:

Array of shape [n_samples] or [n_samples, n_outputs] with class labels for each data sample.

- Return type:

TargetClassification

Notes

This method wraps the corresponding sklearn routine in the module sklearn.neighbors.

- radius_neighbors(X=None, radius=None, *, return_distance=True)

Find the neighbors within a given radius of a fdatagrid.

Return the indices and distances of each point from the dataset lying in a ball with size radius around the points of the query array. Points lying on the boundary are included in the results. The result points are not necessarily sorted by distance to their query point.

- Parameters:

X (Input | None) – Sample or samples whose neighbors will be returned. If not provided, neighbors of each indexed point are returned. In this case, the query point is not considered its own neighbor.

radius (float | None) – Limiting distance of neighbors to return (default is the value passed to the constructor).

return_distance (bool) – Defaults to True. If False, distances will not be returned.

- Returns:

- dist: array of shape (n_samples,). Array of arrays representing the distances to each point, only present if return_distance=True. The distance values are computed according to the metric constructor parameter.

- array of shape (n_samples,): An array of arrays of indices of the approximate nearest points from the population matrix that lie within a ball of size radius around the query points.

- Return type:

array, shape (n_samples,)

Examples

Firstly, we will create a toy dataset

    >>> from skfda.datasets import make_gaussian_process
    >>> X = make_gaussian_process(
    ...     n_samples=30,
    ...     random_state=0,
    ... )

We will fit a Nearest Neighbors estimator.

    >>> from skfda.ml.clustering import NearestNeighbors
    >>> neigh = NearestNeighbors(radius=0.7)
    >>> neigh.fit(X)
    NearestNeighbors(...)

Now we can query the neighbors in a given radius.

    >>> distances, index = neigh.radius_neighbors(X)
    >>> index[0]
    array([ 0, 1, 8, 18]...)

    >>> distances[0].round(2)
    array([ 0. , 0.58, 0.41, 0.68])

See also

kneighbors

Notes

Because the number of neighbors of each point is not necessarily equal, the results for multiple query points cannot be fit in a standard data array. For efficiency, radius_neighbors returns arrays of objects, where each object is a 1D array of indices or distances.

This method wraps the corresponding sklearn routine in the module sklearn.neighbors.
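The ragged shape of the result is easy to see in a pure-Python sketch that works on a precomputed distance matrix. This is only an illustration of the idea, with a hypothetical helper name (radius_neighbors_sketch); the real method returns NumPy object arrays rather than nested lists.

```python
def radius_neighbors_sketch(dist_matrix, radius):
    """Return (distances, indices) as lists holding one list per query point."""
    all_dist, all_ind = [], []
    for row in dist_matrix:
        # Points on the boundary (d == radius) are included.
        ind = [j for j, d in enumerate(row) if d <= radius]
        all_ind.append(ind)
        all_dist.append([row[j] for j in ind])
    return all_dist, all_ind


# Row i holds the distances from query point i to each indexed point.
D = [
    [0.0, 0.2, 0.9],
    [0.2, 0.0, 0.4],
    [0.9, 0.4, 0.0],
]
dist, ind = radius_neighbors_sketch(D, radius=0.4)
print(ind)   # [[0, 1], [0, 1, 2], [1, 2]]
print(dist)  # [[0.0, 0.2], [0.2, 0.0, 0.4], [0.4, 0.0]]
```

Each query has a different number of neighbors, so the result cannot be stored as a rectangular array. The neighbors here come out in index order, not in order of distance.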

- radius_neighbors_graph(X=None, radius=None, mode='connectivity')

Compute the (weighted) graph of Neighbors for points in X.

Neighborhoods are restricted to the points at a distance lower than radius.

- Parameters:

X (Input | None) – The query sample or samples. If not provided, neighbors of each indexed point are returned. In this case, the query point is not considered its own neighbor.

radius (float | None) – Radius of neighborhoods (default is the value passed to the constructor).

mode (Literal['connectivity', 'distance']) – Type of returned matrix: ‘connectivity’ returns the connectivity matrix with ones and zeros; with ‘distance’ the edges are the distances between points.

- Returns:

Sparse matrix in CSR format, shape = [n_samples, n_samples]. A[i, j] is assigned the weight of the edge that connects i to j.

- Return type:

Notes

This method wraps the corresponding sklearn routine in the module sklearn.neighbors.
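As a rough illustration of the two modes, the sketch below builds the three CSR arrays (data, indices, indptr) by hand from a precomputed distance matrix. The function radius_graph_csr is a hypothetical helper, not the library routine. It assumes that boundary points are included and that a point is not its own neighbor, as in the X=None case described above.

```python
def radius_graph_csr(dist_matrix, radius, mode="connectivity"):
    """Build CSR arrays (data, indices, indptr) for a radius neighbors graph."""
    if mode not in ("connectivity", "distance"):
        raise ValueError("mode must be 'connectivity' or 'distance'")
    data, indices, indptr = [], [], [0]
    for i, row in enumerate(dist_matrix):
        for j, d in enumerate(row):
            # Skip the point itself; keep neighbors within the radius.
            if i != j and d <= radius:
                indices.append(j)
                # Edge weight: 1 for connectivity, the distance otherwise.
                data.append(1.0 if mode == "connectivity" else d)
        # indptr[i + 1] marks where row i ends in `indices` and `data`.
        indptr.append(len(indices))
    return data, indices, indptr


D = [
    [0.0, 0.2, 0.9],
    [0.2, 0.0, 0.4],
    [0.9, 0.4, 0.0],
]
print(radius_graph_csr(D, 0.4, mode="connectivity"))
# ([1.0, 1.0, 1.0, 1.0], [1, 0, 2, 1], [0, 1, 3, 4])
print(radius_graph_csr(D, 0.4, mode="distance"))
# ([0.2, 0.2, 0.4, 0.4], [1, 0, 2, 1], [0, 1, 3, 4])
```

Both modes share the same sparsity pattern; only the stored edge weights differ.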

- score(X, y, sample_weight=None)

Return the mean accuracy on the given test data and labels.

In multi-label classification, this is the subset accuracy which is a harsh metric since you require for each sample that each label set be correctly predicted.
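A minimal sketch of the metric, assuming labels are given as plain Python sequences: mean_accuracy is a hypothetical helper, not the library's scoring code. In the multi-label case each sample's label set is represented as a tuple, so a sample counts as correct only when every label matches.

```python
def mean_accuracy(y_true, y_pred, sample_weight=None):
    """Weighted fraction of samples whose prediction matches exactly."""
    if sample_weight is None:
        sample_weight = [1.0] * len(y_true)
    # 1.0 for an exact match, 0.0 otherwise.
    hits = [float(t == p) for t, p in zip(y_true, y_pred)]
    return sum(h * w for h, w in zip(hits, sample_weight)) / sum(sample_weight)


# Single-label case: three of four predictions are right.
print(mean_accuracy([0, 0, 1, 1], [0, 1, 1, 1]))                # 0.75
# Up-weighting the misclassified sample lowers the score.
print(mean_accuracy([0, 0, 1, 1], [0, 1, 1, 1], [1, 3, 1, 1]))  # 0.5
# Multi-label case (subset accuracy): one wrong label fails the sample.
print(mean_accuracy([(1, 0), (0, 1)], [(1, 0), (1, 1)]))        # 0.5
```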

- Parameters:

X (array-like of shape (n_samples, n_features)) – Test samples.

y (array-like of shape (n_samples,) or (n_samples, n_outputs)) – True labels for X.

sample_weight (array-like of shape (n_samples,), default=None) – Sample weights.

- Returns:

score – Mean accuracy of self.predict(X) w.r.t. y.

- Return type:

- set_params(**params)

Set the parameters of this estimator.

The method works on simple estimators as well as on nested objects (such as Pipeline). The latter have parameters of the form <component>__<parameter> so that it’s possible to update each component of a nested object.

- Parameters:

**params (dict) – Estimator parameters.

- Returns:

self – Estimator instance.

- Return type:

estimator instance
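The <component>__<parameter> naming can be illustrated with a toy class. TinyEstimator below is a hypothetical stand-in written for this sketch, not a scikit-learn or skfda class, and it only mimics the double-underscore routing idea.

```python
class TinyEstimator:
    """Hypothetical stand-in for an estimator; not a scikit-learn class."""

    def __init__(self, **params):
        self.params = dict(params)

    def set_params(self, **params):
        for key, value in params.items():
            name, sep, rest = key.partition("__")
            if sep:
                # "<component>__<parameter>": forward to the nested object.
                self.params[name].set_params(**{rest: value})
            else:
                # Plain name: set the parameter on this object.
                self.params[key] = value
        return self  # returning self allows call chaining


clf = TinyEstimator(radius=1.0)
pipe = TinyEstimator(clf=clf)

# Update the nested component through its parent.
pipe.set_params(clf__radius=0.3)
print(clf.params["radius"])  # 0.3
```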

- set_score_request(*, sample_weight='$UNCHANGED$')

Request metadata passed to the score method.

Note that this method is only relevant if enable_metadata_routing=True (see sklearn.set_config()). Please see User Guide on how the routing mechanism works.

The options for each parameter are:

- True: metadata is requested, and passed to score if provided. The request is ignored if metadata is not provided.

- False: metadata is not requested and the meta-estimator will not pass it to score.

- None: metadata is not requested, and the meta-estimator will raise an error if the user provides it.

- str: metadata should be passed to the meta-estimator with this given alias instead of the original name.

The default (sklearn.utils.metadata_routing.UNCHANGED) retains the existing request. This allows you to change the request for some parameters and not others.

New in version 1.3.

Note

This method is only relevant if this estimator is used as a sub-estimator of a meta-estimator, e.g. used inside a Pipeline. Otherwise it has no effect.

- Parameters:

sample_weight (str, True, False, or None, default=sklearn.utils.metadata_routing.UNCHANGED) – Metadata routing for sample_weight parameter in score.

- Returns:

self – The updated object.

- Return type: